References

Austin, J. L. (1962): How To Do Things With Words. Oxford: Clarendon Press.

Best, Stephen and Sharon Marcus (2009): Surface Reading: An Introduction. Representations 108 (1), 1-21.

Blumenberg, Hans (1986): Die Lesbarkeit der Welt. Frankfurt a. Main: Suhrkamp.

Bogen, Steffen (2005): Schattenriss und Sonnenuhr. Überlegungen zu einer kunsthistorischen Diagrammatik. Zeitschrift für Kunstgeschichte 68 (2), 153-176.

Chia, Xinyi and Martin Pumera (2018): Characteristics and Performance of Two-dimensional Materials for Electrocatalysis. Nature Catalysis 1, 909-921. https://doi.org/10.1038/s41929-018-0181-7

Finlay, Steven (2014): Predictive Analytics, Data Mining and Big Data. Myths, Misconceptions and Methods. 1st ed. Palgrave Macmillan.

Flusser, Vilém (1995): Lob der Oberflächlichkeit. Für eine Phänomenologie der Medien. 3rd ed. Mannheim: Bollmann.

Gombrich, Ernst H. (1995): Shadows: The Depiction of Cast Shadows in Western Art. London.

Gumbrecht, Hans Ulrich and K. Ludwig Pfeiffer (eds.) (1994): Materialities of Communication. Stanford: Stanford UP.

Huhtamo, Erkki (2004): Elements of Screenology: Toward an Archaeology of the Screen. Iconics, Vol. 7.

Krämer, Sybille (2003): Writing, Notational Iconicity, Calculus: On Writing as a Cultural Technique. MLN (Modern Language Notes), German Issue 118 (3), 518-537.

Krämer, Sybille (2015): Media, Messenger, Transmission. An Approach to Media Philosophy. Amsterdam: Amsterdam University Press.

Krämer, Sybille (2016a): Is There a Diagrammatic Impulse with Plato? ‘Quasi-diagrammatic-scenes’ in Plato’s Philosophy. In: Sybille Krämer and Christina Ljungberg (eds.), Thinking with Diagrams. Boston/Berlin: de Gruyter.

Krämer, Sybille (2016b): Figuration, Anschauung, Erkenntnis. Grundlinien einer Diagrammatologie. Berlin: Suhrkamp.

Krämer, Sybille (2021): Media as Cultural Techniques: From Inscribed Surfaces to Digital Interfaces. In: Jeremy Swartz and Janet Wasko (eds.), Media: A Transdisciplinary Inquiry. Bristol, UK/Chicago: Intellect, pp. 77-86.

Krämer, Sybille (2022): Reflections on ‘Operative Iconicity’ and ‘Artificial Flatness.’ In: David Wengrow (ed.), Image, Thought, and the Making of Social Worlds. Freiburger Studien zur Archäologie & visuellen Kultur Vol. 3. Heidelberg: Propylaeum.

Krämer, Sybille (forthcoming): Should We Really ‘Hermeneutise’ the Digital Humanities? A Plea for the Epistemic Productivity of a ‘Cultural Technique of Flattening’ in the Humanities. In: ‘Theorytellings: Epistemic Narratives in the Digital Humanities’, Journal of Cultural Analytics.

Latour, Bruno (1986): Visualisation and Cognition: Drawing Things Together. In: H. Kuklick (ed.), Knowledge and Society: Studies in the Sociology of Culture Past and Present, vol. 6. JAI Press, pp. 1-40.

Latour, Bruno (1999): Pandora’s Hope: Essays on the Reality of Science Studies. Cambridge, MA: Harvard University Press.

Leibniz, G.W. (1966): Herrn von Leibniz’ Rechnung mit Null und Eins. 3rd ed. Berlin/Munich: Siemens.

Linke, Angelika and Helmuth Feilke (eds.) (2009): Oberfläche und Performanz. Untersuchungen zur Sprache als dynamische Gestalt. Tübingen: Niemeyer.

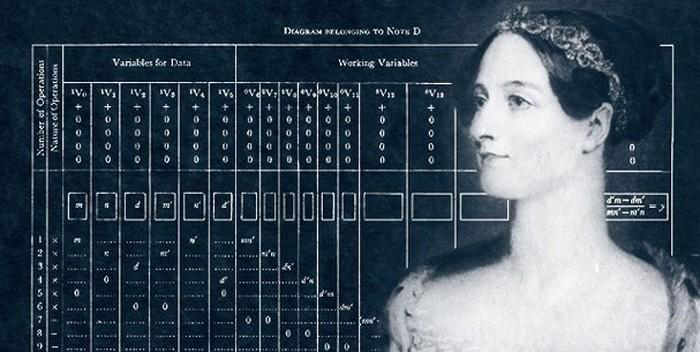

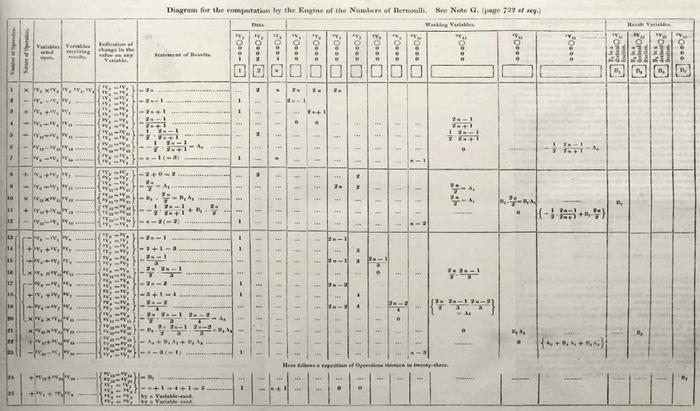

Lovelace, Ada Augusta (1843): Notes by A.A.L. (Augusta Ada Lovelace). Taylor’s Scientific Memoirs, London, Vol. 3, 666-731; repr. in: P. Morrison and E. Morrison (eds.), Charles Babbage and His Calculating Engines: Selected Writings by Charles Babbage and Others. New York: Dover Publications, 1961.

Nake, Frieder (2008): Surface, Interface, Subface. Three Cases of Interaction and One Concept. In: Uwe Seifert, Jin Hyun Kim and Anthony Moore (eds.), Paradoxes of Interactivity. Perspectives for Media Theory, Human-Computer Interaction, and Artistic Investigations. Bielefeld: transcript, pp. 92-109.

Phelan, Peggy (1993): Unmarked: The Politics of Performance. London; New York: Routledge.

Plinius Secundus der Ältere, Gaius (1997): Naturkunde/Historia Naturalis, vols. 1-37, Vol. 35: Farben, Malerei, Plastik. Trans. and ed. Roderich König, with Gerhard Winkler. Düsseldorf/Zurich: Artemis & Winkler.

Saussure, Ferdinand de (1967): Grundfragen der allgemeinen Sprachwissenschaft. 2nd ed. Berlin: De Gruyter.

Schatzki, Theodore R. (2003): A New Societist Social Ontology. Philosophy of the Social Sciences 33 (2), 174-202.

Schatzki, Theodore R. (2016): Praxistheorie als flache Ontologie. In: Hilmar Schäfer (ed.), Praxistheorie: Ein soziologisches Forschungsprogramm. Bielefeld: transcript, pp. 29-44.

Schechner, Richard (2002): Foreword: Fundamentals of Performance Studies. In: Nathan Stucky and Cynthia Wimmer (eds.), Teaching Performance Studies. Southern Illinois University Press.

Miyazaki, Shintaro (2012): Algorhythmics: Understanding Micro-Temporality in Computational Cultures. Computational Culture 2 (28 September 2012).

Stjernfelt, Frederik (2008): Diagrammatology: An Investigation on the Borderlines of Phenomenology, Ontology and Semiotics. Dordrecht: Springer.

Vitruvius (1964): Vitruvii de architectura libri decem (Zehn Bücher über die Architektur). Trans. and ed. Curt Fensterbusch. Darmstadt: Primus.